1. Main Layers

- Conv2D
  - tensorflow.keras.layers.Conv2D
  - tf.nn.conv2d

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_sample_image

# Load a sample image and scale pixel values to [0, 1]
china = load_sample_image('china.jpg') / 255.
print(china.dtype)
print(china.shape)

# Output
# float64
# (427, 640, 3)

plt.imshow(china)
plt.show()
```

```python
flower = load_sample_image('flower.jpg') / 255.
print(flower.dtype)
print(flower.shape)

# Output
# float64
# (427, 640, 3)

plt.imshow(flower)
plt.show()
```

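```python
# Stack both images into one batch; cast to float32 so the dtype matches the filters below
images = np.array([china, flower], dtype = np.float32)
batch_size, height, width, channels = images.shape
print(images.shape)

# Output
# (2, 427, 640, 3)
```

```python
# Build two 7x7 filters
filters = np.zeros(shape = (7, 7, channels, 2), dtype = np.float32)

# Filter 0: vertical line (middle column set to 1)
filters[:, 3, :, 0] = 1

# Filter 1: horizontal line (middle row set to 1)
filters[3, :, :, 1] = 1

print(filters.shape)

# Output
# (7, 7, 3, 2)
```

```python
# Using conv2d with the TensorFlow low-level API
outputs = tf.nn.conv2d(images, filters, strides = 1, padding = 'SAME')
print(outputs.shape)

# Output
# (2, 427, 640, 2)

# Feature map of filter 1 (horizontal line) for the first image
plt.imshow(outputs[0, :, :, 1], cmap = 'gray')
plt.show()

# Feature map of filter 0 (vertical line) for the first image
plt.imshow(outputs[0, :, :, 0], cmap = 'gray')
plt.show()
```

```python
# Using Conv2D with Keras: 32 filters of size 3x3
conv = Conv2D(filters = 32, kernel_size = 3, strides = 1,
              padding = 'same', activation = 'relu')
```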
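To make explicit what `tf.nn.conv2d` computes, here is a minimal NumPy sketch of a stride-1 convolution with `'VALID'` padding (no zero padding). It is an illustration, not TensorFlow's implementation, and the helper name `conv2d_valid` is hypothetical. Each output pixel is the sum of the element-wise product between a filter and the image patch under it, summed over all input channels; like TensorFlow, it does not flip the kernel.

```python
import numpy as np

def conv2d_valid(image, filters):
    """Naive stride-1 'VALID' convolution (hypothetical helper).

    image:   (height, width, in_channels)
    filters: (fh, fw, in_channels, out_channels), same layout as tf.nn.conv2d
    returns: (height - fh + 1, width - fw + 1, out_channels)
    """
    h, w, _ = image.shape
    fh, fw, _, n_out = filters.shape
    out = np.zeros((h - fh + 1, w - fw + 1, n_out), dtype=np.float32)
    for i in range(h - fh + 1):
        for j in range(w - fw + 1):
            patch = image[i:i + fh, j:j + fw, :]  # (fh, fw, in_channels)
            # Multiply the patch by every filter and sum over height, width and channels
            out[i, j, :] = np.tensordot(patch, filters, axes=([0, 1, 2], [0, 1, 2]))
    return out

# Toy 5x5 single-channel image with a bright vertical stripe in column 2
image = np.zeros((5, 5, 1), dtype=np.float32)
image[:, 2, 0] = 1.0

# 3x3 vertical-line filter, analogous to filters[:, 3, :, 0] = 1 above
vertical = np.zeros((3, 3, 1, 1), dtype=np.float32)
vertical[:, 1, 0, 0] = 1.0

result = conv2d_valid(image, vertical)
print(result.shape)     # (3, 3, 1)
print(result[:, :, 0])  # middle column is 3.0, everything else is 0.0
```

With `'SAME'` padding, the input is instead padded with zeros so that, for stride 1, the output keeps the input's height and width, which is why `outputs` above has shape (2, 427, 640, 2).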

- MaxPool2D
  - TensorFlow low-level deep learning API
    - tf.nn.max_pool
    - You have to set the window (ksize) and stride sizes yourself
    - To use it as a layer in a Keras model, wrap it in a Lambda layer
  - Keras high-level API
    - keras.layers.MaxPool2D

```python
import tensorflow as tf
from tensorflow.keras.layers import MaxPool2D, Lambda

# Max pooling with the TensorFlow low-level API:
# the window spans 3 entries along the channel axis (depthwise max pooling),
# so the 3 channels of `images` are reduced to 1
output = tf.nn.max_pool(images,
                        ksize = (1, 1, 1, 3),
                        strides = (1, 1, 1, 3),
                        padding = 'VALID')

# Wrapping the same operation in a Lambda layer so it can be used inside a Keras model
output_keras = Lambda(
    lambda X: tf.nn.max_pool(X, ksize = (1, 1, 1, 3), strides = (1, 1, 1, 3), padding = 'VALID')
)

# Max pooling with Keras
max_pool = MaxPool2D(pool_size = 2)
```

```python
flower = load_sample_image('flower.jpg') / 255.
print(flower.dtype)
print(flower.shape)

# Output
# float64
# (427, 640, 3)

# Add a batch dimension
flower = np.expand_dims(flower, axis = 0)
flower.shape

# Output
# (1, 427, 640, 3)
```

```python
# Max pooling with pool_size=2 halves the height and width (427 -> 213, 640 -> 320)
output = Conv2D(filters = 32, kernel_size = 3, strides = 1, padding = 'SAME', activation = 'relu')(flower)
output = MaxPool2D(pool_size = 2)(output)
output.shape

# Output
# TensorShape([1, 213, 320, 32])

plt.imshow(output[0, :, :, 8], cmap = 'gray')
plt.show()
```
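Max pooling has no weights: it simply keeps the largest value in each window. The NumPy sketch below uses hypothetical helpers `max_pool2d` and `depth_max_pool`, assuming the stride equals the window size and `'VALID'` padding (leftover rows and columns are dropped). It reproduces the 427x640 -> 213x320 shape change above and the channel-wise pooling that `tf.nn.max_pool` performs with `ksize = (1, 1, 1, 3)`.

```python
import numpy as np

def max_pool2d(x, pool_size=2):
    """Non-overlapping spatial max pooling (stride == pool_size, 'VALID' padding).

    x: (height, width, channels)
    """
    h, w, c = x.shape
    oh, ow = h // pool_size, w // pool_size
    # Crop to a multiple of pool_size, then split each spatial axis into (blocks, pool_size)
    x = x[:oh * pool_size, :ow * pool_size, :]
    return x.reshape(oh, pool_size, ow, pool_size, c).max(axis=(1, 3))

def depth_max_pool(x, pool_depth=3):
    """Max over groups of `pool_depth` consecutive channels (depthwise max pooling)."""
    h, w, c = x.shape
    return x.reshape(h, w, c // pool_depth, pool_depth).max(axis=3)

x = np.arange(16, dtype=np.float32).reshape(4, 4, 1)
pooled = max_pool2d(x)
# Windows [0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]
# -> pooled[:, :, 0] is [[5, 7], [13, 15]]

# Same shape arithmetic as the Keras example: 427 x 640 -> 213 x 320
print(max_pool2d(np.zeros((427, 640, 32))).shape)  # (213, 320, 32)

# Depthwise pooling reduces 3 channels to 1, like the tf.nn.max_pool call above
rgb = np.random.default_rng(0).random((4, 4, 3))
print(depth_max_pool(rgb).shape)  # (4, 4, 1)
```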

- AvgPool2D
  - TensorFlow low-level deep learning API
    - tf.nn.avg_pool
  - Keras high-level API
    - keras.layers.AvgPool2D

```python
from tensorflow.keras.layers import AvgPool2D

# Original
flower.shape

# Output
# (1, 427, 640, 3)
```

```python
# Average pooling with pool_size=2 halves the height and width (427 -> 213, 640 -> 320)
output = Conv2D(filters = 32, kernel_size = 3, strides = 1, padding = 'SAME', activation = 'relu')(flower)
output = AvgPool2D(pool_size = 2)(output)
output.shape

# Output
# TensorShape([1, 213, 320, 32])

plt.imshow(output[0, :, :, 8], cmap = 'gray')
plt.show()
```
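Average pooling uses the same windows as max pooling but takes their mean, so every value in the window contributes instead of only the strongest activation. A minimal NumPy sketch, assuming a hypothetical helper `avg_pool2d` with stride equal to the pool size and `'VALID'` padding:

```python
import numpy as np

def avg_pool2d(x, pool_size=2):
    """Non-overlapping average pooling (stride == pool_size, 'VALID' padding).

    x: (height, width, channels); leftover rows/columns are dropped.
    """
    h, w, c = x.shape
    oh, ow = h // pool_size, w // pool_size
    x = x[:oh * pool_size, :ow * pool_size, :]
    return x.reshape(oh, pool_size, ow, pool_size, c).mean(axis=(1, 3))

x = np.arange(16, dtype=np.float32).reshape(4, 4, 1)
pooled = avg_pool2d(x)
# Top-left window is [0, 1, 4, 5] -> mean 2.5 (max pooling would keep 5)
print(pooled[:, :, 0])
```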

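- GlobalAvgPool2D (global average pooling layer)
  - keras.layers.GlobalAvgPool2D()
  - It outputs a single mean value per feature map, so most of the information in the feature maps is lost
  - It can still be useful at the output layer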

```python
from tensorflow.keras.layers import GlobalAvgPool2D

output = Conv2D(filters = 32, kernel_size = 3, strides = 1, padding = 'SAME', activation = 'relu')(flower)
output = GlobalAvgPool2D()(output)
output.shape

# Output
# TensorShape([1, 32])
```
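Global average pooling is simply the mean of each feature map over its height and width, which is why a (1, 427, 640, 32) Conv2D output collapses to (1, 32). A NumPy sketch on a small random stand-in batch (the array is made up for illustration):

```python
import numpy as np

# Stand-in batch of feature maps: (batch, height, width, channels)
feature_maps = np.random.default_rng(0).random((2, 5, 5, 32))

# Global average pooling = mean over the height and width axes
gap = feature_maps.mean(axis=(1, 2))
print(gap.shape)  # (2, 32): one number per feature map per image
```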

2. CNN Architecture and Training by Example

● Typical Architecture

- Import modules

```python
%load_ext tensorboard

import datetime
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.keras import Model
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPool2D, AvgPool2D, Dropout
from tensorflow.keras import datasets
from tensorflow.keras.utils import to_categorical, plot_model
```

- Load and preprocess the data

```python
(x_train, y_train), (x_test, y_test) = datasets.fashion_mnist.load_data()

# Original data shapes
print(x_train.shape)
print(y_train.shape)
print(x_test.shape)
print(y_test.shape)

# Output
# (60000, 28, 28)
# (60000,)
# (10000, 28, 28)
# (10000,)
```

```python
# Add a channel axis to the x data
x_train = x_train[:, :, :, np.newaxis]
x_test = x_test[:, :, :, np.newaxis]
print(x_train.shape)
print(x_test.shape)

# Output
# (60000, 28, 28, 1)
# (10000, 28, 28, 1)
```
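Conv2D expects 4-D input of shape (batch, height, width, channels) by default, so grayscale images need an explicit channel axis of size 1. The three spellings used in this post (`[:, :, :, np.newaxis]`, `[..., np.newaxis]`, and `np.expand_dims`) produce the same shape; a quick check on a dummy array standing in for `x_train`:

```python
import numpy as np

# Stand-in for x_train: 5 grayscale 28x28 images, shape (batch, height, width)
x = np.zeros((5, 28, 28), dtype=np.uint8)

a = x[:, :, :, np.newaxis]      # indexing form used above
b = x[..., np.newaxis]          # same thing; `...` means "all remaining axes"
c = np.expand_dims(x, axis=-1)  # function form (used earlier for `flower`, with axis=0)

print(a.shape, b.shape, c.shape)  # all three are (5, 28, 28, 1)
```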

```python
# One-hot encode the y data
num_classes = 10
y_train = to_categorical(y_train, num_classes)
y_test = to_categorical(y_test, num_classes)
print(y_train.shape)
print(y_test.shape)

# Output
# (60000, 10)
# (10000, 10)
```
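`to_categorical` turns each integer label into a one-hot row of length `num_classes`. The same encoding can be sketched in plain NumPy by picking rows of an identity matrix; this illustrates the idea rather than Keras's implementation, and the labels are made up:

```python
import numpy as np

labels = np.array([0, 3, 9, 3])  # hypothetical integer class labels
num_classes = 10

# Row k of the identity matrix is the one-hot vector for class k
one_hot = np.eye(num_classes, dtype=np.float32)[labels]
print(one_hot.shape)           # (4, 10)
print(one_hot.argmax(axis=1))  # argmax recovers the original labels
```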

```python
# Scale x to the [0, 1] range by dividing by the maximum pixel value, 255
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255.
x_test /= 255.
```
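Dividing by 255 is min-max scaling to [0, 1], not standardization in the statistical sense (zero mean, unit standard deviation). A small NumPy comparison of the two on made-up pixel values:

```python
import numpy as np

# Hypothetical uint8 pixel values
pixels = np.array([0, 51, 102, 255], dtype=np.uint8)

# What the code above does: divide by 255 so values fall in [0, 1]
scaled = pixels.astype('float32') / 255.

# Standardization (z-score), by contrast: subtract the mean, divide by the standard deviation
standardized = (pixels - pixels.mean()) / pixels.std()

print(scaled)               # approximately [0.0, 0.2, 0.4, 1.0]
print(standardized.mean())  # close to 0
print(standardized.std())   # close to 1
```

If you standardize instead, compute the mean and standard deviation on the training set only and reuse them for the test set.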

- A simple CNN model

```python
def build():
    model = Sequential([Conv2D(64, 7, activation = 'relu', padding = 'same', input_shape = [28, 28, 1]),
                        MaxPool2D(pool_size = 2),
                        Conv2D(128, 3, activation = 'relu', padding = 'same'),
                        MaxPool2D(pool_size = 2),
                        Conv2D(256, 3, activation = 'relu', padding = 'same'),
                        MaxPool2D(pool_size = 2),
                        Flatten(),
                        Dense(128, activation = 'relu'),
                        Dropout(0.5),
                        Dense(64, activation = 'relu'),
                        Dropout(0.5),
                        Dense(10, activation = 'softmax')])
    return model
```

- Compile the model

```python
model = build()
model.compile(optimizer = 'adam',
              loss = 'categorical_crossentropy',
              metrics = ['accuracy'])

model.summary()

# Output
# Model: "sequential_2"
# _________________________________________________________________
#  Layer (type)                     Output Shape          Param #
# =================================================================
#  conv2d_11 (Conv2D)               (None, 28, 28, 64)    3200
#  max_pooling2d_9 (MaxPooling2D)   (None, 14, 14, 64)    0
#  conv2d_12 (Conv2D)               (None, 14, 14, 128)   73856
#  max_pooling2d_10 (MaxPooling2D)  (None, 7, 7, 128)     0
#  conv2d_13 (Conv2D)               (None, 7, 7, 256)     295168
#  max_pooling2d_11 (MaxPooling2D)  (None, 3, 3, 256)     0
#  flatten_2 (Flatten)              (None, 2304)          0
#  dense_6 (Dense)                  (None, 128)           295040
#  dropout_4 (Dropout)              (None, 128)           0
#  dense_7 (Dense)                  (None, 64)            8256
#  dropout_5 (Dropout)              (None, 64)            0
#  dense_8 (Dense)                  (None, 10)            650
# =================================================================
# Total params: 676,170
# Trainable params: 676,170
# Non-trainable params: 0
# _________________________________________________________________

plot_model(model)
```
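The Param # column follows from two simple formulas: a Conv2D layer has kernel_height x kernel_width x in_channels x filters weights plus one bias per filter, and a Dense layer has inputs x units weights plus one bias per unit; pooling, Flatten, and Dropout layers have no parameters. A short sketch that reproduces the total above (the helper names are hypothetical):

```python
def conv2d_params(kernel_size, in_channels, filters):
    # One kernel_size x kernel_size x in_channels kernel per filter, plus one bias per filter
    return kernel_size * kernel_size * in_channels * filters + filters

def dense_params(inputs, units):
    # Full weight matrix plus one bias per unit
    return inputs * units + units

# 'same' convolutions keep the 28x28 size; each 2x2 max pooling floors the division:
# 28 -> 14 -> 7 -> 3
side = 28
for _ in range(3):
    side //= 2
flatten_size = side * side * 256  # 3 * 3 * 256 = 2304

layers = {
    'conv2d (7x7, 1 -> 64)': conv2d_params(7, 1, 64),
    'conv2d (3x3, 64 -> 128)': conv2d_params(3, 64, 128),
    'conv2d (3x3, 128 -> 256)': conv2d_params(3, 128, 256),
    'dense (2304 -> 128)': dense_params(flatten_size, 128),
    'dense (128 -> 64)': dense_params(128, 64),
    'dense (64 -> 10)': dense_params(64, 10),
}
for name, count in layers.items():
    print(f'{name:26s} {count:>8,}')
print('total', f'{sum(layers.values()):,}')  # total 676,170
```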

- Hyper Parameters

```python
callbacks = [tf.keras.callbacks.TensorBoard(log_dir = './logs')]
EPOCHS = 20
BATCH_SIZE = 200
VERBOSE = 1
```

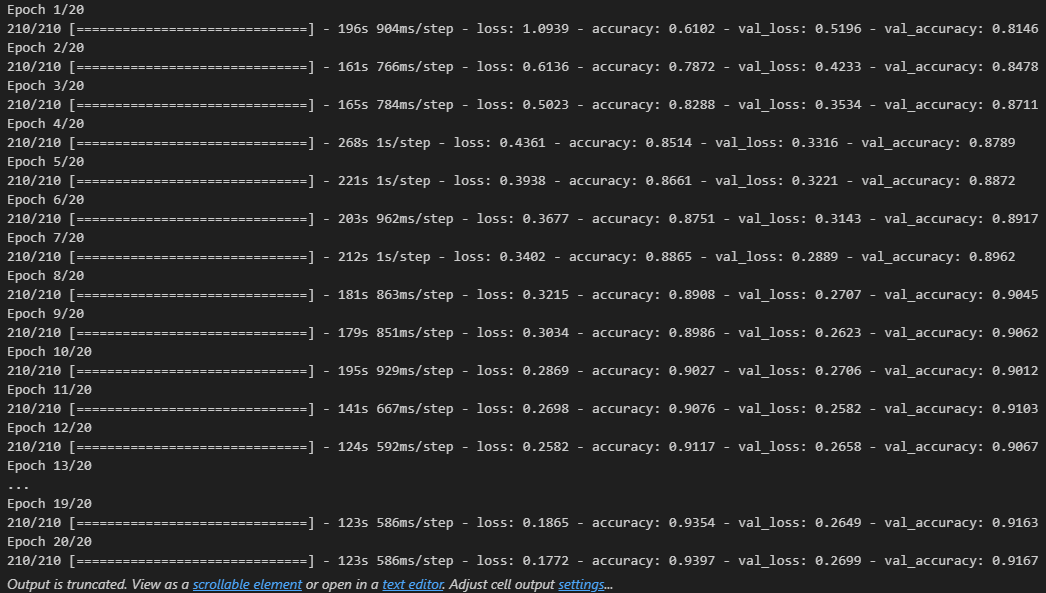

- Train the model (a GPU is recommended)
  - validation_split = 0.3 holds out 30% of the training data as a validation set

```python
hist = model.fit(x_train, y_train,
                 epochs = EPOCHS,
                 batch_size = BATCH_SIZE,
                 validation_split = 0.3,
                 callbacks = callbacks,
                 verbose = VERBOSE)
```
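Per the Keras documentation for `fit`, `validation_split` takes the validation samples from the end of `x` and `y`, before any shuffling, so here the last 30% of the training images are never trained on. If the data were ordered (for example sorted by label), shuffle it first or pass `validation_data` explicitly, as the LeNet-5 example below does. A hypothetical NumPy helper that mimics this kind of split (rounding to whole samples is an assumption of the sketch):

```python
import numpy as np

def split_like_validation_split(x, y, validation_split):
    """Hypothetical helper: hold out the LAST `validation_split` fraction, without shuffling."""
    n_val = int(round(len(x) * validation_split))
    split_at = len(x) - n_val
    return (x[:split_at], y[:split_at]), (x[split_at:], y[split_at:])

x = np.arange(60000)  # stand-in for the 60,000 Fashion MNIST training images
y = np.arange(60000)
(x_tr, y_tr), (x_va, y_va) = split_like_validation_split(x, y, 0.3)
print(len(x_tr), len(x_va))  # 42000 18000
print(x_va[0])               # 42000: validation starts right after the training part
```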

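- Check the results in TensorBoard

```python
# Timestamped run directory; to keep runs separate, pass it as log_dir to the TensorBoard callback before training
log_dir = './logs/' + datetime.datetime.now().strftime('%Y%m%d-%H%M%S')

%tensorboard --logdir logs/
```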

● LeNet-5 (code source: https://datahacker.rs/lenet-5-implementation-tensorflow-2-0/)

- One of the earliest CNN models
- Designed for handwritten digit recognition

- Import modules

```python
import datetime
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.keras import Model
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPool2D, AvgPool2D, Dropout
from tensorflow.keras import datasets
from tensorflow.keras.utils import to_categorical, plot_model
from sklearn.model_selection import train_test_split
```

- Load and preprocess the data

```python
(x_train_full, y_train_full), (x_test, y_test) = datasets.mnist.load_data()

# Hold out 30% of the training set as validation data
x_train, x_val, y_train, y_val = train_test_split(x_train_full, y_train_full, test_size = 0.3, random_state = 777)

# Add a channel axis
x_train = x_train[..., np.newaxis]
x_val = x_val[..., np.newaxis]
x_test = x_test[..., np.newaxis]

# One-hot encode the labels
num_classes = 10
y_train = to_categorical(y_train, num_classes)
y_val = to_categorical(y_val, num_classes)
y_test = to_categorical(y_test, num_classes)

# Scale pixel values to [0, 1]
x_train = x_train.astype('float32')
x_val = x_val.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255.
x_val /= 255.
x_test /= 255.

print(x_train.shape)
print(y_train.shape)
print(x_val.shape)
print(y_val.shape)
print(x_test.shape)
print(y_test.shape)

# Output
# (42000, 28, 28, 1)
# (42000, 10)
# (18000, 28, 28, 1)
# (18000, 10)
# (10000, 28, 28, 1)
# (10000, 10)
```

- Build and compile the model

```python
class LeNet(Sequential):
    def __init__(self, input_shape, nb_classes):
        super().__init__()

        self.add(Conv2D(6, kernel_size = (5, 5), strides = (1, 1), activation = 'tanh', input_shape = input_shape, padding = 'same'))
        self.add(AvgPool2D(pool_size = (2, 2), strides = (2, 2), padding = 'valid'))
        self.add(Conv2D(16, kernel_size = (5, 5), strides = (1, 1), activation = 'tanh', padding = 'valid'))
        self.add(AvgPool2D(pool_size = (2, 2), strides = (2, 2), padding = 'valid'))
        self.add(Flatten())
        self.add(Dense(120, activation = 'tanh'))
        self.add(Dense(84, activation = 'tanh'))
        self.add(Dense(nb_classes, activation = 'softmax'))

        self.compile(optimizer = 'adam',
                     loss = 'categorical_crossentropy',
                     metrics = ['accuracy'])

model = LeNet(input_shape = (28, 28, 1), nb_classes = 10)
model.summary()

# Output
# Model: "le_net_2"
# _________________________________________________________________
#  Layer (type)                            Output Shape        Param #
# =================================================================
#  conv2d_17 (Conv2D)                      (None, 28, 28, 6)   156
#  average_pooling2d_3 (AveragePooling2D)  (None, 14, 14, 6)   0
#  conv2d_18 (Conv2D)                      (None, 10, 10, 16)  2416
#  average_pooling2d_4 (AveragePooling2D)  (None, 5, 5, 16)    0
#  flatten_3 (Flatten)                     (None, 400)         0
#  dense_9 (Dense)                         (None, 120)         48120
#  dense_10 (Dense)                        (None, 84)          10164
#  dense_11 (Dense)                        (None, 10)          850
# =================================================================
# Total params: 61,706
# Trainable params: 61,706
# Non-trainable params: 0
# _________________________________________________________________

plot_model(model, show_shapes = True)
```
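The output shapes in this summary follow the standard size formulas along each spatial axis: with `'valid'` padding, out = floor((n - kernel) / stride) + 1, and with `'same'` padding, out = ceil(n / stride). A sketch applying them layer by layer to LeNet-5 (the helper `out_size` is hypothetical):

```python
import math

def out_size(n, kernel, stride, padding):
    """Spatial output size of a conv/pool layer along one axis."""
    if padding == 'same':
        return math.ceil(n / stride)
    if padding == 'valid':
        return (n - kernel) // stride + 1
    raise ValueError(f'unknown padding: {padding}')

n = 28
n = out_size(n, 5, 1, 'same')   # Conv2D(6, 5x5, same)       -> 28
n = out_size(n, 2, 2, 'valid')  # AvgPool2D(2x2, stride 2)   -> 14
n = out_size(n, 5, 1, 'valid')  # Conv2D(16, 5x5, valid)     -> 10
n = out_size(n, 2, 2, 'valid')  # AvgPool2D(2x2, stride 2)   -> 5
print(n, n * n * 16)            # 5 400 (the Flatten output size)
```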

- Hyper Parameters

```python
EPOCHS = 20
BATCH_SIZE = 128
VERBOSE = 1
```

- Train the model

```python
hist = model.fit(x_train, y_train,
                 epochs = EPOCHS,
                 batch_size = BATCH_SIZE,
                 validation_data = (x_val, y_val),
                 verbose = VERBOSE)
```

- Visualize the training results

```python
plt.figure(figsize = (12, 6))

# Left: training vs. validation loss
plt.subplot(1, 2, 1)
plt.plot(hist.history['loss'], 'b-', label = 'loss')
plt.plot(hist.history['val_loss'], 'm--', label = 'val_loss')
plt.xlabel('Epochs')
plt.grid()
plt.legend()

# Right: training vs. validation accuracy
plt.subplot(1, 2, 2)
plt.plot(hist.history['accuracy'], 'g-', label = 'accuracy')
plt.plot(hist.history['val_accuracy'], 'r-', label = 'val_accuracy')
plt.xlabel('Epochs')
plt.grid()
plt.legend()

plt.show()
```

- Evaluate the model

```python
model.evaluate(x_test, y_test)

# Output
# 313/313 [==============================] - 3s 7ms/step - loss: 0.0564 - accuracy: 0.9854
# [0.0564129501581192, 0.9854000210762024]
```
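The `313/313` in the evaluation log counts batches, not samples: when no `batch_size` is given, `evaluate` uses 32, and 10,000 test images need ceil(10000 / 32) = 313 batches. The same arithmetic gives the number of training steps per epoch for the hyperparameters used in this post (the helper name is hypothetical):

```python
import math

def steps_per_epoch(num_samples, batch_size):
    # The last, partial batch still counts as one step
    return math.ceil(num_samples / batch_size)

print(steps_per_epoch(10000, 32))   # 313: evaluate on the 10,000 test images (default batch_size 32)
print(steps_per_epoch(42000, 200))  # 210: first model, 70% of 60,000 images with BATCH_SIZE = 200
print(steps_per_epoch(42000, 128))  # 329: LeNet-5, 42,000 training images with BATCH_SIZE = 128
```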
