1. Import modules

%load_ext tensorboard
import datetime
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.keras import Model
from tensorflow.keras.models import Sequential
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.datasets.fashion_mnist import load_data
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Dropout, Input, Flatten

2. Load and preprocess the data

(x_train, y_train), (x_test, y_test) = load_data()
# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train[..., np.newaxis]
x_test = x_test[..., np.newaxis]
# Scale pixel values from [0, 255] to [0, 1]
x_train = x_train / 255.
x_test = x_test / 255.
print(x_train.shape)
print(y_train.shape)
print(x_test.shape)
print(y_test.shape)

# Output
(60000, 28, 28, 1)
(60000,)
(10000, 28, 28, 1)
(10000,)

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']
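The two preprocessing steps above can be sketched in plain NumPy. The sketch runs on a random stand-in batch, not real Fashion-MNIST data. That batch has the same uint8 dtype and (N, 28, 28) shape that `load_data()` returns. The helper name `preprocess` and the float32 cast are assumptions of this sketch. The post itself divides by `255.`, which produces float64 arrays.

```python
import numpy as np

def preprocess(images: np.ndarray) -> np.ndarray:
    """Hypothetical helper: add a channel axis and scale uint8 pixels to [0, 1]."""
    # (N, 28, 28) -> (N, 28, 28, 1); Conv2D expects channels-last input by default
    images = images[..., np.newaxis]
    # Assumption: cast to float32 (Keras' default float type) before scaling
    return images.astype("float32") / 255.0

# Random stand-in batch with the same dtype and shape as load_data() output
fake = np.random.randint(0, 256, size=(4, 28, 28), dtype=np.uint8)
out = preprocess(fake)
print(out.shape, out.dtype, out.min() >= 0.0, out.max() <= 1.0)
```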

3. Build and compile the model

def build_model():
    inputs = Input(shape = (28, 28, 1))
    # No activation is given, so these three convolutions are linear
    output = Conv2D(filters = 32, kernel_size = (3, 3))(inputs)
    output = Conv2D(filters = 64, kernel_size = (3, 3))(output)
    output = Conv2D(filters = 64, kernel_size = (3, 3))(output)
    output = Flatten()(output)
    output = Dense(units = 128, activation = 'relu')(output)
    output = Dense(units = 64, activation = 'relu')(output)
    output = Dense(units = 10, activation = 'softmax')(output)

    model = Model(inputs = [inputs], outputs = [output])
    # Labels are integers 0-9, so the sparse variant of cross-entropy is used
    model.compile(optimizer = 'adam',
                  loss = 'sparse_categorical_crossentropy',
                  metrics = ['acc'])
    return model

model_1 = build_model()
model_1.summary()

# Output

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 28, 28, 1)] 0

conv2d (Conv2D) (None, 26, 26, 32) 320

conv2d_1 (Conv2D) (None, 24, 24, 64) 18496

conv2d_2 (Conv2D) (None, 22, 22, 64) 36928

flatten (Flatten) (None, 30976) 0

dense (Dense) (None, 128) 3965056

dense_1 (Dense) (None, 64) 8256

dense_2 (Dense) (None, 10) 650

=================================================================

Total params: 4,029,706

Trainable params: 4,029,706

Non-trainable params: 0

_________________________________________________________________
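The parameter counts in the summary follow from two formulas:

- A Conv2D layer has (kernel height x kernel width x input channels + 1) x filters weights. The +1 is one bias per filter.
- A Dense layer has (inputs + 1) x units weights.

With 'valid' padding and stride 1, each 3x3 convolution shrinks the height and width by 2. The plain-Python sketch below recomputes model_1's numbers; the helper names are illustrative. It also shows that almost all parameters (3,965,056 of 4,029,706) sit in the first Dense layer, because Flatten outputs 30,976 values.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # one kernel_h x kernel_w x in_channels weight tensor plus one bias per filter
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_units, units):
    # weight matrix plus one bias per unit
    return (in_units + 1) * units

def valid_conv_size(n, k):
    # 'valid' padding, stride 1: the kernel must fit entirely inside the input
    return n - k + 1

side, in_ch, param_counts = 28, 1, []
for filters in (32, 64, 64):  # the three 3x3 Conv2D layers of build_model()
    param_counts.append(conv2d_params(3, 3, in_ch, filters))
    side = valid_conv_size(side, 3)
    in_ch = filters

flat = side * side * in_ch  # size of the Flatten output
param_counts.append(dense_params(flat, 128))
param_counts.append(dense_params(128, 64))
param_counts.append(dense_params(64, 10))
print(side, flat, param_counts, sum(param_counts))
# 22 30976 [320, 18496, 36928, 3965056, 8256, 650] 4029706
```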

4. Train the model

hist_1 = model_1.fit(x_train, y_train,

epochs = 25,

validation_split = 0.3,

batch_size = 128)

5. Visualize the training results

plt.figure(figsize = (12, 4))

plt.subplot(1, 2, 1)

plt.plot(hist_1.history['loss'], 'b--', label = 'loss')

plt.plot(hist_1.history['val_loss'], 'r:', label = 'val_loss')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(hist_1.history['acc'], 'b--', label = 'accuracy')

plt.plot(hist_1.history['val_acc'], 'r:', label = 'val_accuracy')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

6. Evaluate the model

model_1.evaluate(x_test, y_test)

# Output

loss: 1.1168 - acc: 0.8566

[1.116817831993103, 0.8565999865531921]
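`model.compile` uses `sparse_categorical_crossentropy` because `y_train` holds integer class indices (0 to 9). With one-hot labels from `to_categorical`, which the post imports but never uses, the matching loss would be `categorical_crossentropy`.

The NumPy sketch below uses made-up softmax outputs to show that both losses compute the same quantity: the mean negative log-probability of the true class. It is a simplification, since Keras' implementation also clips probabilities for numerical stability.

```python
import numpy as np

def sparse_ce(probs, labels):
    # mean of -log(probability assigned to the true integer class)
    return float(np.mean(-np.log(probs[np.arange(len(labels)), labels])))

def categorical_ce(probs, one_hot):
    # the same quantity computed from one-hot targets
    return float(np.mean(-np.sum(one_hot * np.log(probs), axis=1)))

# Made-up softmax outputs for two samples and three classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])
one_hot = np.eye(3)[labels]  # plays the role of to_categorical(labels, 3)
print(sparse_ce(probs, labels), categorical_ce(probs, one_hot))
```

This loss grows sharply when the model is confidently wrong. A test loss of 1.1168 alongside 85.66% accuracy is therefore one plausible sign that model_1 has become overconfident on the examples it misclassifies.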

7. Rebuild the model (compare the number of trainable parameters)

def build_model_2():
    inputs = Input(shape = (28, 28, 1))
    # Each MaxPool2D (default 2x2 window, stride 2) halves the feature map, rounding down
    output = Conv2D(filters = 32, kernel_size = (3, 3))(inputs)
    output = MaxPool2D(strides = (2, 2))(output)
    output = Conv2D(filters = 64, kernel_size = (3, 3))(output)
    output = MaxPool2D(strides = (2, 2))(output)
    output = Conv2D(filters = 64, kernel_size = (3, 3))(output)
    output = MaxPool2D(strides = (2, 2))(output)
    output = Flatten()(output)
    output = Dense(units = 128, activation = 'relu')(output)
    # Dropout randomly zeroes 30% of the activations during training
    output = Dropout(0.3)(output)
    output = Dense(units = 64, activation = 'relu')(output)
    output = Dropout(0.3)(output)
    output = Dense(units = 10, activation = 'softmax')(output)

    model = Model(inputs = [inputs], outputs = [output])
    model.compile(optimizer = 'adam',
                  loss = 'sparse_categorical_crossentropy',
                  metrics = ['acc'])
    return model

model_2 = build_model_2()

model_2.summary()

# Output

Model: "model_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_3 (InputLayer) [(None, 28, 28, 1)] 0

conv2d_6 (Conv2D) (None, 26, 26, 32) 320

max_pooling2d (MaxPooling2D) (None, 13, 13, 32) 0

conv2d_7 (Conv2D) (None, 11, 11, 64) 18496

max_pooling2d_1 (MaxPooling2D) (None, 5, 5, 64) 0

conv2d_8 (Conv2D) (None, 3, 3, 64) 36928

max_pooling2d_2 (MaxPooling2D) (None, 1, 1, 64) 0

flatten_2 (Flatten) (None, 64) 0

dense_6 (Dense) (None, 128) 8320

dropout (Dropout) (None, 128) 0

dense_7 (Dense) (None, 64) 8256

dropout_1 (Dropout) (None, 64) 0

dense_8 (Dense) (None, 10) 650

=================================================================

Total params: 72,970

Trainable params: 72,970

Non-trainable params: 0

_________________________________________________________________

- The number of trainable parameters drops from 4,029,706 to 72,970.
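The drop can be checked by hand. MaxPool2D uses a 2x2 window by default. With strides = (2, 2) and 'valid' padding, each pooling layer maps a side length n to floor((n - 2) / 2) + 1, so the feature map goes 26 → 13, 11 → 5 and 3 → 1.

Flatten then yields only 64 values instead of 30,976, which is where most of the savings come from: the first Dense layer falls from 3,965,056 to 8,320 parameters. The plain-Python sketch below (helper name illustrative) reproduces the 72,970 total. Dropout layers contribute no weights.

```python
def valid_out(n, k, stride=1):
    # output length for 'valid' padding: floor((n - k) / stride) + 1
    return (n - k) // stride + 1

side, in_ch, params = 28, 1, 0
for filters in (32, 64, 64):
    params += (3 * 3 * in_ch + 1) * filters   # Conv2D 3x3 weights + biases
    side = valid_out(side, 3)                 # 3x3 'valid' conv shrinks by 2
    side = valid_out(side, 2, stride=2)       # MaxPool2D(2x2, stride 2) halves, rounding down
    in_ch = filters

flat = side * side * in_ch
for units_in, units_out in ((flat, 128), (128, 64), (64, 10)):
    params += (units_in + 1) * units_out      # Dense layers; Dropout adds nothing
print(side, flat, params)
# 1 64 72970
```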

8. Retrain the model

hist_2 = model_2.fit(x_train, y_train,

epochs = 25,

validation_split = 0.3,

batch_size = 128)

# Visualize the retraining results

plt.figure(figsize = (12, 4))

plt.subplot(1, 2, 1)

plt.plot(hist_2.history['loss'], 'b--', label = 'loss')

plt.plot(hist_2.history['val_loss'], 'r:', label = 'val_loss')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(hist_2.history['acc'], 'b--', label = 'accuracy')

plt.plot(hist_2.history['val_acc'], 'r:', label = 'val_accuracy')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

- The model overfits the training data less than the first model did.
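Whether one model overfits less than another can also be read numerically from the History objects, not only from the plots. The sketch below is a hypothetical helper, not part of Keras. It takes a `history` dict such as `hist_1.history` or `hist_2.history` and reports three things:

- the epoch with the lowest validation loss;
- the final gap between validation and training loss;
- how far validation loss has risen since its minimum.

A large gap and a rising validation loss are typical signs of overfitting. To act on this during training, Keras provides `tf.keras.callbacks.EarlyStopping` (for example with `monitor='val_loss'` and `restore_best_weights=True`). The post does not use it.

```python
def overfitting_summary(history):
    """Hypothetical helper: summarize train/validation loss curves from a Keras history dict."""
    loss, val_loss = history["loss"], history["val_loss"]
    # epoch (1-based) at which validation loss was lowest
    best_epoch = min(range(len(val_loss)), key=lambda i: val_loss[i]) + 1
    # > 0 means the final validation loss exceeds the final training loss
    final_gap = val_loss[-1] - loss[-1]
    # how much validation loss climbed after its best epoch
    rise_since_best = val_loss[-1] - val_loss[best_epoch - 1]
    return {"best_epoch": best_epoch,
            "final_gap": final_gap,
            "rise_since_best": rise_since_best}

# Usage (names from the post):
# print(overfitting_summary(hist_1.history))
# print(overfitting_summary(hist_2.history))
```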

9. Re-evaluate the model

model_2.evaluate(x_test, y_test)

# Output

loss: 0.4026 - acc: 0.8830

[0.4026452302932739, 0.8830000162124634]

10. Improve model performance (stacking more layers)

from tensorflow.keras.layers import BatchNormalization, ReLU

def build_model_3():
    inputs = Input(shape = (28, 28, 1))
    # 'same' padding keeps the spatial size; 'valid' shrinks each side by 2 for a 3x3 kernel
    output = Conv2D(filters = 32, kernel_size = 3, activation = 'relu', padding = 'same')(inputs)
    output = Conv2D(filters = 64, kernel_size = 3, activation = 'relu', padding = 'valid')(output)
    output = MaxPool2D(strides = (2, 2))(output)
    output = Dropout(0.5)(output)
    output = Conv2D(filters = 128, kernel_size = 3, activation = 'relu', padding = 'same')(output)
    output = Conv2D(filters = 256, kernel_size = 3, activation = 'relu', padding = 'valid')(output)
    output = MaxPool2D(strides = (2, 2))(output)
    output = Dropout(0.5)(output)
    output = Flatten()(output)
    output = Dense(units = 256, activation = 'relu')(output)
    output = Dropout(0.5)(output)
    output = Dense(units = 100, activation = 'relu')(output)
    output = Dropout(0.5)(output)
    output = Dense(units = 10, activation = 'softmax')(output)

    model = Model(inputs = [inputs], outputs = [output])
    model.compile(optimizer = 'adam',
                  loss = 'sparse_categorical_crossentropy',
                  metrics = ['acc'])
    return model

model_3 = build_model_3()

model_3.summary()

# Output

Model: "model_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_4 (InputLayer) [(None, 28, 28, 1)] 0

conv2d_9 (Conv2D) (None, 28, 28, 32) 320

conv2d_10 (Conv2D) (None, 26, 26, 64) 18496

max_pooling2d_3 (MaxPooling2D) (None, 13, 13, 64) 0

dropout_2 (Dropout) (None, 13, 13, 64) 0

conv2d_11 (Conv2D) (None, 13, 13, 128) 73856

conv2d_12 (Conv2D) (None, 11, 11, 256) 295168

max_pooling2d_4 (MaxPooling2D) (None, 5, 5, 256) 0

dropout_3 (Dropout) (None, 5, 5, 256) 0

flatten_3 (Flatten) (None, 6400) 0

dense_9 (Dense) (None, 256) 1638656

dropout_4 (Dropout) (None, 256) 0

dense_10 (Dense) (None, 100) 25700

dropout_5 (Dropout) (None, 100) 0

dense_11 (Dense) (None, 10) 1010

=================================================================

Total params: 2,053,206

Trainable params: 2,053,206

Non-trainable params: 0

_________________________________________________________________
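Model 3 mixes 'same' and 'valid' padding. With a stride of 1, 'same' pads the input so the output keeps its height and width. 'valid' applies no padding, so a 3x3 kernel removes 2 pixels from each dimension.

The plain-Python sketch below (helper names illustrative) walks through the shapes and reproduces the 2,053,206 parameters reported by `model_3.summary()`.

```python
def conv_out(n, k, padding):
    # stride 1: 'same' keeps the size, 'valid' shrinks it by k - 1
    return n if padding == "same" else n - k + 1

def conv_params(k, in_ch, filters):
    # k x k x in_ch weights plus one bias, per filter
    return (k * k * in_ch + 1) * filters

side, in_ch, total = 28, 1, 0
# (filters, padding, followed by MaxPool2D?) for the four Conv2D layers of build_model_3()
for filters, padding, pool in ((32, "same", False), (64, "valid", True),
                               (128, "same", False), (256, "valid", True)):
    total += conv_params(3, in_ch, filters)
    side = conv_out(side, 3, padding)
    if pool:
        # 2x2 'valid' pooling with stride 2: floor((n - 2) / 2) + 1 == n // 2
        side //= 2
    in_ch = filters

flat = side * side * in_ch
for units_in, units_out in ((flat, 256), (256, 100), (100, 10)):
    total += (units_in + 1) * units_out
print(side, flat, total)
# 5 6400 2053206
```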

- Train the model and visualize the results

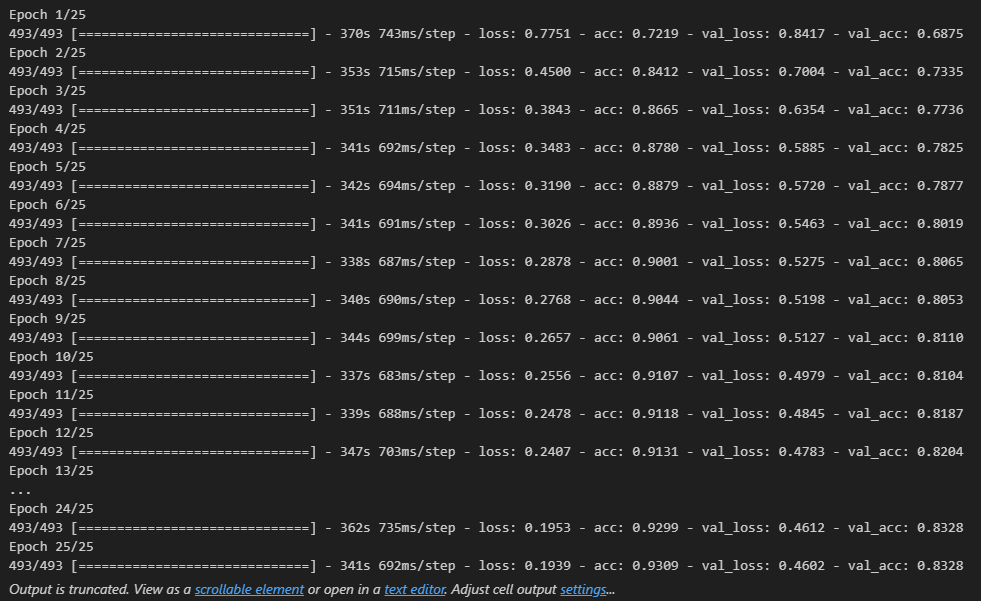

hist_3 = model_3.fit(x_train, y_train,

epochs = 25,

validation_split = 0.3,

batch_size = 128)

- The model did not overfit, and stacking more layers can still give good performance.

plt.figure(figsize = (12, 4))

plt.subplot(1, 2, 1)

plt.plot(hist_3.history['loss'], 'b--', label = 'loss')

plt.plot(hist_3.history['val_loss'], 'r:', label = 'val_loss')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(hist_3.history['acc'], 'b--', label = 'accuracy')

plt.plot(hist_3.history['val_acc'], 'r:', label = 'val_accuracy')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

model_3.evaluate(x_test, y_test)

# Output

loss: 0.2157 - acc: 0.9261

[0.21573999524116516, 0.9261000156402588]
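Model 3 relies heavily on Dropout(0.5). According to the Keras documentation, the Dropout layer sets each input unit to 0 with frequency `rate` during training. It scales the remaining units by 1 / (1 - rate), so the expected sum of the inputs is unchanged. During evaluate and predict it passes inputs through untouched.

Below is a minimal NumPy sketch of that "inverted dropout" behaviour. It is written from the documented description, not from Keras' source, and the function name is hypothetical.

```python
import numpy as np

def inverted_dropout(x, rate, rng, training=True):
    """Zero each element with probability `rate`; scale survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return x  # at inference time the layer is an identity
    keep = rng.random(x.shape) >= rate  # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
x = np.ones((1000, 100))
y = inverted_dropout(x, 0.5, rng)
# roughly half the entries are zero, and the mean stays close to 1
print((y == 0).mean(), y.mean())
```

Because dropout is active only while training, heavy dropout is one common reason the training-loss curve can sit above the validation-loss curve in plots like the ones above.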

11. Improve model performance (Image Augmentation)

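from tensorflow.keras.preprocessing.image import ImageDataGenerator

image_generator = ImageDataGenerator(
    rotation_range = 10,        # random rotation range, in degrees
    zoom_range = 0.2,           # random zoom in [0.8, 1.2]
    shear_range = 0.6,          # shear angle, in degrees
    width_shift_range = 0.1,    # fraction of the image width
    height_shift_range = 0.1,   # fraction of the image height
    horizontal_flip = True,
    vertical_flip = False
)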

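augment_size = 200
print(x_train.shape)
print(x_train[0].shape)

# Output
(60000, 28, 28, 1)
(28, 28, 1)

# Stack augment_size copies of the first training image into a (200, 28, 28, 1) batch
# and draw one batch of randomly transformed versions of it
x_augment = image_generator.flow(np.tile(x_train[0].reshape(28 * 28 * 1), augment_size).reshape(-1, 28, 28, 1),
                                 np.zeros(augment_size), batch_size = augment_size, shuffle = False).next()[0]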

plt.figure(figsize = (10, 10))
for i in range(1, 101):
    plt.subplot(10, 10, i)
    plt.axis('off')
    plt.imshow(x_augment[i - 1].reshape(28, 28), cmap = 'gray')
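The augmentation demo feeds `image_generator.flow` a batch built from `augment_size` copies of `x_train[0]`. `flow` expects a rank-4 array of shape (batch, height, width, channels), so the tiled vector has to be reshaped back into 28x28x1 images.

The NumPy sketch below runs on a random stand-in image. It checks that the flatten-tile-reshape construction gives the same array as the arguably more direct `np.repeat` along a new batch axis.

```python
import numpy as np

n = 200
image = np.random.rand(28, 28, 1)  # stand-in for x_train[0]

# Flatten, tile the copies end to end, then reshape into a batch of images
tiled = np.tile(image.reshape(28 * 28 * 1), n).reshape(-1, 28, 28, 1)

# Equivalent: add a leading batch axis and repeat along it
repeated = np.repeat(image[np.newaxis], n, axis=0)

print(tiled.shape, np.array_equal(tiled, repeated))
```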

- Using the same ImageDataGenerator approach, add augmented samples to the training data

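from tensorflow.keras.preprocessing.image import ImageDataGenerator

image_generator = ImageDataGenerator(
    rotation_range = 15,        # random rotation range, in degrees
    zoom_range = 0.1,           # random zoom in [0.9, 1.1]
    shear_range = 0.6,          # shear angle, in degrees
    width_shift_range = 0.15,   # fraction of the image width
    height_shift_range = 0.1,   # fraction of the image height
    horizontal_flip = True,
    vertical_flip = False
)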

augment_size = 30000

random_mask = np.random.randint(x_train.shape[0], size = augment_size)

x_augmented = x_train[random_mask].copy()

y_augmented = y_train[random_mask].copy()

x_augmented = image_generator.flow(x_augmented, np.zeros(augment_size),

batch_size = augment_size, shuffle = False).next()[0]

x_train = np.concatenate((x_train, x_augmented))

y_train = np.concatenate((y_train, y_augmented))

# The 30,000 augmented images are appended to the training set
print(x_train.shape)

# Output
(90000, 28, 28, 1)
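To make two of the ImageDataGenerator options concrete, here is a simplified NumPy sketch of what `horizontal_flip` and `width_shift_range` do to a single (height, width, channels) image. It is an illustration under assumptions, not Keras' implementation, and the function names are hypothetical. The differences from the real generator:

- The generator flips each image with probability 0.5.
- It samples the shift at random (with `width_shift_range = 0.15`, up to 15% of the width, about 4 pixels on 28-pixel images) and applies it with interpolation. The sketch shifts by a whole number of pixels.

Uncovered pixels are filled by repeating the edge column, which mirrors the generator's default `fill_mode='nearest'`.

```python
import numpy as np

def horizontal_flip(img):
    # mirror left-right: reverse the width axis of an (H, W, C) image
    return img[:, ::-1, :]

def shift_width(img, dx):
    """Shift an (H, W, C) image dx whole pixels right (negative = left).

    Pixels uncovered by the shift repeat the nearest edge column,
    a simplified stand-in for fill_mode='nearest'.
    """
    w = img.shape[1]
    # source column for each output column, clamped to the valid range
    src = np.clip(np.arange(w) - dx, 0, w - 1)
    return img[:, src, :]

tiny = np.array([1, 2, 3, 4]).reshape(1, 4, 1)  # a 1x4 single-channel "image"
print(horizontal_flip(tiny).ravel(), shift_width(tiny, 1).ravel())
```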

- Train the model and visualize the results

model_4 = build_model_3()

model_4.summary()

# Output

Model: "model_4"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_5 (InputLayer) [(None, 28, 28, 1)] 0

conv2d_13 (Conv2D) (None, 28, 28, 32) 320

conv2d_14 (Conv2D) (None, 26, 26, 64) 18496

max_pooling2d_5 (MaxPooling2D) (None, 13, 13, 64) 0

dropout_6 (Dropout) (None, 13, 13, 64) 0

conv2d_15 (Conv2D) (None, 13, 13, 128) 73856

conv2d_16 (Conv2D) (None, 11, 11, 256) 295168

max_pooling2d_6 (MaxPooling2D) (None, 5, 5, 256) 0

dropout_7 (Dropout) (None, 5, 5, 256) 0

flatten_4 (Flatten) (None, 6400) 0

dense_12 (Dense) (None, 256) 1638656

dropout_8 (Dropout) (None, 256) 0

dense_13 (Dense) (None, 100) 25700

dropout_9 (Dropout) (None, 100) 0

dense_14 (Dense) (None, 10) 1010

=================================================================

Total params: 2,053,206

Trainable params: 2,053,206

Non-trainable params: 0

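_________________________________________________________________

# Note: validation_split takes the last 30% of the samples (before shuffling),
# which here are all appended augmented images
hist_4 = model_4.fit(x_train, y_train,
                     epochs = 25,
                     validation_split = 0.3,
                     batch_size = 128)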

plt.figure(figsize = (12, 4))

plt.subplot(1, 2, 1)

plt.plot(hist_4.history['loss'], 'b--', label = 'loss')

plt.plot(hist_4.history['val_loss'], 'r:', label = 'val_loss')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(hist_4.history['acc'], 'b--', label = 'accuracy')

plt.plot(hist_4.history['val_acc'], 'r:', label = 'val_accuracy')

plt.xlabel('Epochs')

plt.grid()

plt.legend()

model_4.evaluate(x_test, y_test)

# Output

loss: 0.2023 - acc: 0.9313

[0.2023032009601593, 0.9312999844551086]
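One caveat applies to the `fit` call in this section. The Keras documentation states that with `validation_split` the validation data is taken from the last samples of `x` and `y`, before shuffling. The 30,000 augmented images were appended to the end of `x_train`. So the roughly 27,000 validation samples here are all augmented images, and only about 3,000 augmented images end up in the training portion.

A minimal sketch of one workaround is to shuffle the images and labels with the same permutation before calling `fit`. The helper name `shuffle_together` and the seed are assumptions. Another option, arguably cleaner, is to split off a validation set first and augment only the training part.

```python
import numpy as np

def shuffle_together(x, y, seed=0):
    """Hypothetical helper: apply one random permutation to images and labels."""
    # a single shared permutation keeps each image paired with its label
    perm = np.random.default_rng(seed).permutation(len(x))
    return x[perm], y[perm]

# Usage before training (names from the post):
# x_train, y_train = shuffle_together(x_train, y_train)
# hist_4 = model_4.fit(x_train, y_train, epochs = 25,
#                      validation_split = 0.3, batch_size = 128)
```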

- Training with different settings (hyperparameters) than those used above would likely give even better results.
