● Keras Transfer Learning

- When building a new model, reuse a model that has already been trained on another dataset

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D, MaxPool2D, Dense, Flatten, BatchNormalization, Activation

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.applications import VGG16

# Load a VGG16 convolutional base pretrained on ImageNet as an example (include_top=False drops the classifier head)

vgg16 = VGG16(weights = 'imagenet',

input_shape = (32, 32, 3), include_top = False)

model = Sequential()

model.add(vgg16)

model.add(Flatten())

model.add(Dense(256))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dense(10, activation = 'softmax'))

model.summary()

# Output

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

vgg16 (Functional) (None, 1, 1, 512) 14714688

flatten (Flatten) (None, 512) 0

dense (Dense) (None, 256) 131328

batch_normalization (BatchNormalization) (None, 256) 1024

activation (Activation) (None, 256) 0

dense_1 (Dense) (None, 10) 2570

=================================================================

Total params: 14,849,610

Trainable params: 14,849,098

Non-trainable params: 512

_________________________________________________________________

- Besides VGG16, Keras provides other ImageNet-pretrained models such as MobileNet, ResNet50, and Xception that can be used for transfer learning
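The numbers in the summary above can be checked by hand. The sketch below is plain Python with hypothetical helper names; it assumes that the VGG16 base loaded with `include_top=False` keeps the spatial size through its 3×3 "same" convolutions and halves it (floor division) at each of its five 2×2 max-pooling stages, and that `BatchNormalization` stores four values per feature (gamma and beta are trained, the moving mean and moving variance are not).

```python
def vgg16_base_output_size(input_size, num_pools=5):
    """Spatial size after VGG16's five 2x2 max-pooling stages (floor division)."""
    size = input_size
    for _ in range(num_pools):
        size //= 2
    return size

def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def batchnorm_params(n_features):
    """Return (trainable, non_trainable): gamma/beta vs. moving mean/variance."""
    return 2 * n_features, 2 * n_features

VGG16_BASE_PARAMS = 14_714_688  # copied from the model summary

side = vgg16_base_output_size(32)          # 32 -> 16 -> 8 -> 4 -> 2 -> 1
flat = side * side * 512                   # size of the Flatten output
d1 = dense_params(flat, 256)               # Dense(256)
bn_train, bn_frozen = batchnorm_params(256)
d2 = dense_params(256, 10)                 # Dense(10, softmax)

total = VGG16_BASE_PARAMS + d1 + bn_train + bn_frozen + d2
print(side, flat, d1, bn_train + bn_frozen, d2)
print("total:", total, "non-trainable:", bn_frozen)
```

With `input_size=150` the same helper gives 4, which matches the `(None, 4, 4, 512)` output of the 150×150 VGG16 base used in the Dogs vs Cats example.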

1. Example: Dogs vs Cats

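- Uses the Kaggle Dogs vs. Cats data (https://www.kaggle.com/c/dogs-vs-cats/data); the code below downloads a filtered subset of it with 2,000 training and 1,000 validation images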

- Images are read from folders with the flow_from_directory method of ImageDataGenerator

- Import modules

import tensorflow as tf

from tensorflow.keras.preprocessing.image import array_to_img, img_to_array, load_img, ImageDataGenerator

from tensorflow.keras.layers import Conv2D, Flatten, MaxPool2D, Input, Dropout, Dense

from tensorflow.keras import Model

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam

import os

import zipfile

import matplotlib.image as mpimg

import matplotlib.pyplot as plt

- Load the data

# Download the dataset (uses the third-party wget package: pip install wget)

import wget

wget.download("https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip")

# Unzip

local_zip = 'cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

# Extract into the current folder

zip_ref.extractall()

zip_ref.close()

# Use the extracted folder as the base path and build the paths to its train and validation subfolders

base_dir = 'cats_and_dogs_filtered'

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

# Paths to the cats and dogs subfolders inside train and validation

train_cats_dir = os.path.join(train_dir, 'cats')

train_dogs_dir = os.path.join(train_dir, 'dogs')

validation_cats_dir = os.path.join(validation_dir, 'cats')

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

train_cat_frames = os.listdir(train_cats_dir)

train_dog_frames = os.listdir(train_dogs_dir)
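The folders above follow the layout that `flow_from_directory` expects: one subfolder per class. When the `classes` argument is not given, the Keras documentation states that label indices follow the alphanumeric order of the subfolder names. The helper below is a hypothetical standard-library imitation of that mapping (not Keras's own code); it predicts that `cats` becomes 0 and `dogs` becomes 1, so with `class_mode='binary'` the sigmoid output of the models in this post can be read as the probability of "dog".

```python
import os
import tempfile

def infer_class_indices(directory):
    """Map each subdirectory name to an integer label in sorted order.

    A sketch of the behaviour documented for flow_from_directory,
    not its actual implementation.
    """
    classes = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: index for index, name in enumerate(classes)}

# Build a throwaway tree shaped like cats_and_dogs_filtered/train
with tempfile.TemporaryDirectory() as root:
    for cls in ("dogs", "cats"):          # creation order does not matter
        os.makedirs(os.path.join(root, cls))
    open(os.path.join(root, "README.txt"), "w").close()  # plain files are skipped
    class_indices = infer_class_indices(root)

print(class_indices)  # {'cats': 0, 'dogs': 1}
```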

- Inspect augmented images

# Define an ImageDataGenerator for data augmentation

datagen = ImageDataGenerator(

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True,

fill_mode = 'nearest'

)

# Load one training image and add a batch dimension

img_path = os.path.join(train_cats_dir, train_cat_frames[2])

img = load_img(img_path, target_size = (150, 150))

x = img_to_array(img)

x = x.reshape((1, ) + x.shape)

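# Draw random augmentations of the image and plot the first five
i = 0
for batch in datagen.flow(x, batch_size = 1):
    plt.figure(i)
    imgplot = plt.imshow(array_to_img(batch[0]))
    i += 1
    if i % 5 == 0:
        break  # flow() loops forever, so stop after five images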
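The `ImageDataGenerator` arguments above are ranges from which a random transform is drawn for each image. Following the Keras documentation for float arguments (a shift below 1 is a fraction of the image size, `zoom_range=z` means a zoom factor drawn from `[1 - z, 1 + z]`, `rotation_range` is in degrees), the hypothetical helper below turns the settings used here into concrete bounds for a 150×150 image. It is only a reading aid, not part of Keras.

```python
def augmentation_bounds(width, height, rotation_range, width_shift_range,
                        height_shift_range, zoom_range):
    """Concrete bounds implied by float ImageDataGenerator arguments (sketch)."""
    def shift_pixels(shift, size):
        # floats below 1 are fractions of the image size, otherwise pixels
        return shift * size if shift < 1 else shift

    dx = shift_pixels(width_shift_range, width)
    dy = shift_pixels(height_shift_range, height)
    return {
        "rotation_deg": (-rotation_range, rotation_range),
        "shift_x_px": (-dx, dx),
        "shift_y_px": (-dy, dy),
        "zoom_factor": (1 - zoom_range, 1 + zoom_range),
    }

bounds = augmentation_bounds(150, 150, rotation_range=40, width_shift_range=0.2,
                             height_shift_range=0.2, zoom_range=0.2)
for name, (low, high) in bounds.items():
    print(f"{name}: {low:g} .. {high:g}")
```

So each augmented cat can be rotated by up to 40 degrees, moved by up to about 30 pixels in each direction, and zoomed between 0.8x and 1.2x.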

- Data generators for the training and validation sets

train_datagen = ImageDataGenerator(

rescale = 1. / 255,

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True

)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary'

)

val_datagen = ImageDataGenerator(rescale = 1. / 255)

validation_generator = val_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary'

)

# Output

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

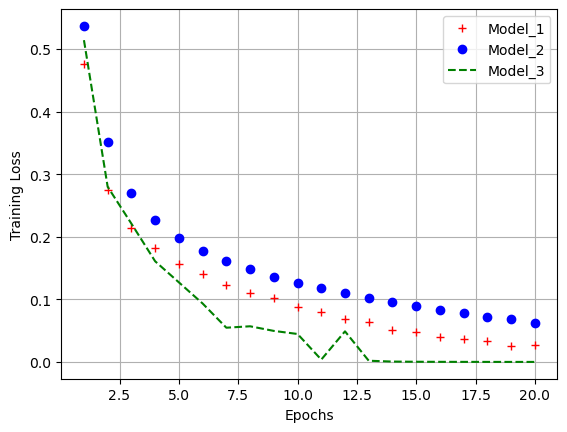
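`steps_per_epoch` and `validation_steps` (passed to `model.fit` later) are counted in batches, not images. With 2,000 training and 1,000 validation images and `batch_size=20` in the generators, one pass over each set takes 100 and 50 batches, which is where the values used in this post come from. A minimal sketch of that arithmetic (the helper name is hypothetical):

```python
import math

def steps_for(num_images, batch_size):
    """Batches needed to see every image once (the last batch may be partial)."""
    return math.ceil(num_images / batch_size)

train_steps = steps_for(2000, 20)
val_steps = steps_for(1000, 20)
print(train_steps, val_steps)  # 100 50
```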

- Build and compile the model

model = Sequential()

model.add(Conv2D(32, (3, 3), activation = 'relu', input_shape = (150, 150, 3)))

model.add(MaxPool2D(2, 2))

model.add(Conv2D(64, (3, 3), activation = 'relu'))

model.add(MaxPool2D(2, 2))

model.add(Conv2D(128, (3, 3), activation = 'relu'))

model.add(MaxPool2D(2, 2))

model.add(Conv2D(128, (3, 3), activation = 'relu'))

model.add(MaxPool2D(2, 2))

model.add(Flatten())

model.add(Dropout(0.5))

model.add(Dense(512, activation = 'relu'))

model.add(Dense(1, activation = 'sigmoid'))

model.compile(loss = 'binary_crossentropy',

optimizer = Adam(learning_rate = 1e-4),

metrics = ['acc'])

model.summary()

# Output

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 148, 148, 32) 896

max_pooling2d (MaxPooling2D) (None, 74, 74, 32) 0

conv2d_1 (Conv2D) (None, 72, 72, 64) 18496

max_pooling2d_1 (MaxPooling2D) (None, 36, 36, 64) 0

conv2d_2 (Conv2D) (None, 34, 34, 128) 73856

max_pooling2d_2 (MaxPooling2D) (None, 17, 17, 128) 0

conv2d_3 (Conv2D) (None, 15, 15, 128) 147584

max_pooling2d_3 (MaxPooling2D) (None, 7, 7, 128) 0

flatten_1 (Flatten) (None, 6272) 0

dropout (Dropout) (None, 6272) 0

dense_2 (Dense) (None, 512) 3211776

dense_3 (Dense) (None, 1) 513

=================================================================

Total params: 3,453,121

Trainable params: 3,453,121

Non-trainable params: 0

_________________________________________________________________

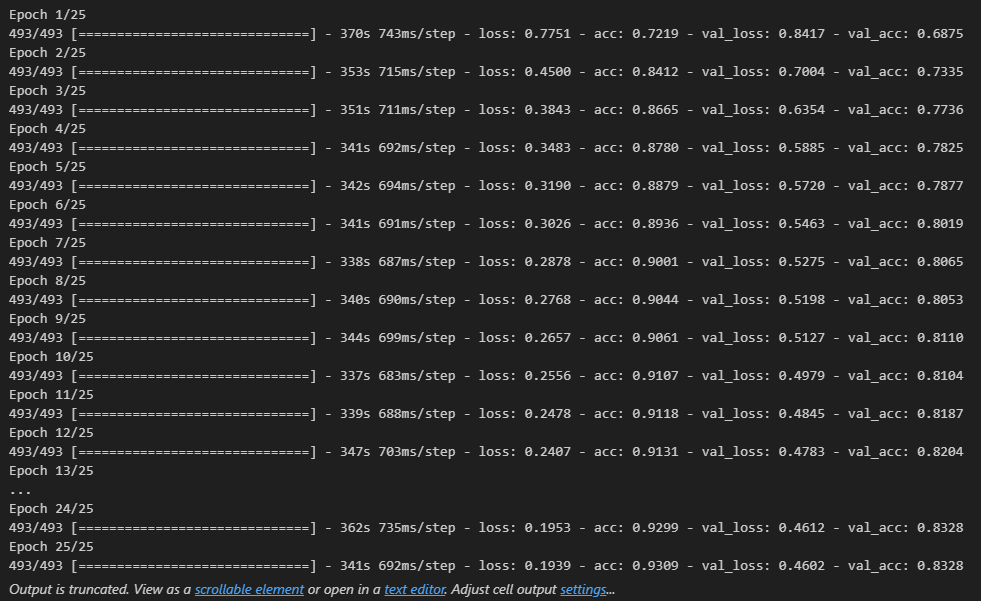
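The parameter counts in this summary follow from two formulas: a `Conv2D` layer with a k×k kernel has `(k*k*in_channels + 1) * filters` parameters (one bias per filter), and a `Dense` layer has `(inputs + 1) * units`; pooling, `Flatten` and `Dropout` have none. The sketch below recomputes the table from the layer settings of the model above, taking the flattened size 7×7×128 = 6,272 from the output shapes in the summary.

```python
def conv2d_params(kernel, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters

def dense_params(inputs, units):
    """Weights plus one bias per unit."""
    return (inputs + 1) * units

layers = [
    ("conv2d", conv2d_params(3, 3, 32)),        # RGB input
    ("conv2d_1", conv2d_params(3, 32, 64)),
    ("conv2d_2", conv2d_params(3, 64, 128)),
    ("conv2d_3", conv2d_params(3, 128, 128)),
    ("dense_2", dense_params(7 * 7 * 128, 512)),
    ("dense_3", dense_params(512, 1)),
]
for name, count in layers:
    print(f"{name:10s}{count:>12,}")
print(f"{'total':10s}{sum(c for _, c in layers):>12,}")
```

Almost all of the 3.45 million parameters sit in `dense_2`, which is one reason the `Dropout(0.5)` is placed right before it.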

- Train the model and visualize the training history

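# The generators already yield batches of 20, so batch_size is not passed to fit():
# 100 steps x 20 = 2,000 training images, 50 steps x 20 = 1,000 validation images
history = model.fit(train_generator,
                    steps_per_epoch = 100,
                    epochs = 30,
                    validation_data = validation_generator,
                    validation_steps = 50,
                    verbose = 2)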

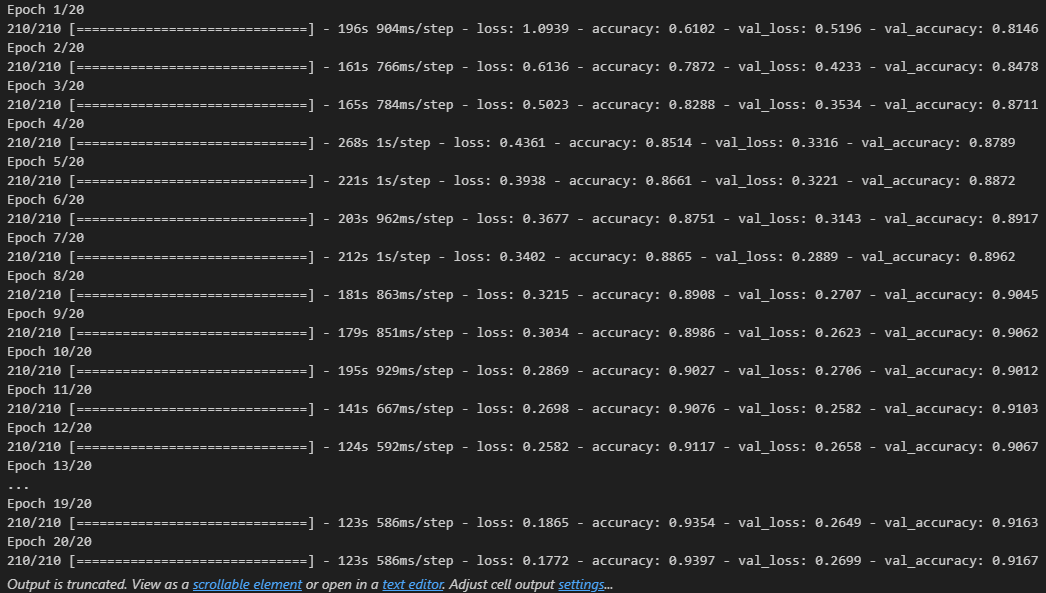

# Visualization
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(len(acc))

# Loss curves (training: blue dashed, validation: red dotted)
plt.figure()
plt.plot(epochs, loss, 'b--', label = 'Train Loss')
plt.plot(epochs, val_loss, 'r:', label = 'Validation Loss')
plt.grid()
plt.legend()

# Accuracy curves in a separate figure
plt.figure()
plt.plot(epochs, acc, 'b--', label = 'Train Accuracy')
plt.plot(epochs, val_acc, 'r:', label = 'Validation Accuracy')
plt.grid()
plt.legend()
plt.show()

- Save the model

model.save('cats_and_dogs_model.h5')

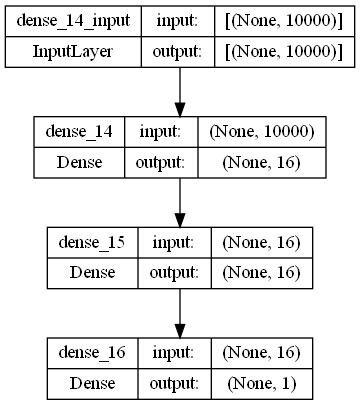

- Use a pretrained model

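from tensorflow.keras.applications import VGG16
from tensorflow.keras.optimizers import RMSprop

# 150x150 input to match the generators; include_top=False drops VGG16's classifier
conv_base = VGG16(weights = 'imagenet',
                  input_shape = (150, 150, 3), include_top = False)

def build_model_with_pretrained(conv_base):
    # Put a small binary classifier on top of the pretrained convolutional base
    model = Sequential()
    model.add(conv_base)
    model.add(Flatten())
    model.add(Dense(256, activation = 'relu'))
    model.add(Dense(1, activation = 'sigmoid'))
    model.compile(loss = 'binary_crossentropy',
                  optimizer = RMSprop(learning_rate = 2e-5),
                  metrics = ['accuracy'])
    return model

- Check the number of parameters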

model = build_model_with_pretrained(conv_base)

model.summary()

# Output

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

vgg16 (Functional) (None, 4, 4, 512) 14714688

flatten_2 (Flatten) (None, 8192) 0

dense_4 (Dense) (None, 256) 2097408

dense_5 (Dense) (None, 1) 257

=================================================================

Total params: 16,812,353

Trainable params: 16,812,353

Non-trainable params: 0

_________________________________________________________________

- Freeze layers

- Before training, freeze the convolutional base so that its pretrained weights are not updated

# Before freezing

print(len(model.trainable_weights))

# Output

30

# After freezing

conv_base.trainable = False

print(len(model.trainable_weights))

# Output

4
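The counts 30 and 4 come from the fact that each `Conv2D` and `Dense` layer contributes two weight tensors (kernel and bias), while pooling and `Flatten` layers contribute none. Assuming the standard VGG16 base with 13 convolutional layers, the arithmetic is:

```python
WEIGHTS_PER_LAYER = 2    # kernel + bias for Conv2D and Dense
vgg16_conv_layers = 13   # include_top=False keeps only the convolutional base
head_dense_layers = 2    # Dense(256) and Dense(1) added on top

before_freeze = (vgg16_conv_layers + head_dense_layers) * WEIGHTS_PER_LAYER
after_freeze = head_dense_layers * WEIGHTS_PER_LAYER  # conv_base.trainable = False
print(before_freeze, after_freeze)  # 30 4
```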

- Compile the model

- Changing the trainable attribute only takes effect once the model is compiled again, so recompile it

model.compile(loss = 'binary_crossentropy',

optimizer = RMSprop(learning_rate = 2e-5),

metrics = ['accuracy'])

- Image generators

train_datagen = ImageDataGenerator(

rescale = 1. / 255,

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True

)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary'

)

val_datagen = ImageDataGenerator(rescale = 1. / 255)

validation_generator = val_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary'

)

# Output

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

- Train the model again, now with the frozen pretrained base

# Batches of 20 come from the generators, so batch_size is not passed to fit()
history2 = model.fit(train_generator,
                     steps_per_epoch = 100,
                     epochs = 30,
                     validation_data = validation_generator,
                     validation_steps = 50,
                     verbose = 2)

acc = history2.history['accuracy']
val_acc = history2.history['val_accuracy']
loss = history2.history['loss']
val_loss = history2.history['val_loss']
epochs = range(len(acc))

# Loss curves
plt.figure()
plt.plot(epochs, loss, 'b--', label = 'Train Loss')
plt.plot(epochs, val_loss, 'r:', label = 'Validation Loss')
plt.grid()
plt.legend()

# Accuracy curves in a separate figure
plt.figure()
plt.plot(epochs, acc, 'b--', label = 'Train Accuracy')
plt.plot(epochs, val_acc, 'r:', label = 'Validation Accuracy')
plt.grid()
plt.legend()
plt.show()

- Save the model

model.save('cats_and_dogs_with_pretrained_model.h5')

2. Feature Map Visualization

- Set up the model

import numpy as np

from tensorflow.keras.models import load_model

from tensorflow.keras.preprocessing import image

# Load the saved model

model = load_model('cats_and_dogs_model.h5')

model.summary()

# Output

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 148, 148, 32) 896

max_pooling2d (MaxPooling2D) (None, 74, 74, 32) 0

conv2d_1 (Conv2D) (None, 72, 72, 64) 18496

max_pooling2d_1 (MaxPooling2D) (None, 36, 36, 64) 0

conv2d_2 (Conv2D) (None, 34, 34, 128) 73856

max_pooling2d_2 (MaxPooling2D) (None, 17, 17, 128) 0

conv2d_3 (Conv2D) (None, 15, 15, 128) 147584

max_pooling2d_3 (MaxPooling2D) (None, 7, 7, 128) 0

flatten_1 (Flatten) (None, 6272) 0

dropout (Dropout) (None, 6272) 0

dense_2 (Dense) (None, 512) 3211776

dense_3 (Dense) (None, 1) 513

=================================================================

Total params: 3,453,121

Trainable params: 3,453,121

Non-trainable params: 0

_________________________________________________________________

img_path = 'cats_and_dogs_filtered/validation/dogs/dog.2000.jpg'

img = image.load_img(img_path, target_size = (150, 150))

img_tensor = image.img_to_array(img)

img_tensor = img_tensor[np.newaxis, ...]

img_tensor /= 255.

print(img_tensor.shape)

# Output

(1, 150, 150, 3)

plt.imshow(img_tensor[0])

plt.show()

# Collect the outputs of only the first 8 layers (the Conv2D and MaxPool2D layers)

conv_output = [layer.output for layer in model.layers[:8]]

conv_output

# Output

[<KerasTensor: shape=(None, 148, 148, 32) dtype=float32 (created by layer 'conv2d')>,

<KerasTensor: shape=(None, 74, 74, 32) dtype=float32 (created by layer 'max_pooling2d')>,

<KerasTensor: shape=(None, 72, 72, 64) dtype=float32 (created by layer 'conv2d_1')>,

<KerasTensor: shape=(None, 36, 36, 64) dtype=float32 (created by layer 'max_pooling2d_1')>,

<KerasTensor: shape=(None, 34, 34, 128) dtype=float32 (created by layer 'conv2d_2')>,

<KerasTensor: shape=(None, 17, 17, 128) dtype=float32 (created by layer 'max_pooling2d_2')>,

<KerasTensor: shape=(None, 15, 15, 128) dtype=float32 (created by layer 'conv2d_3')>,

<KerasTensor: shape=(None, 7, 7, 128) dtype=float32 (created by layer 'max_pooling2d_3')>]

# A model that maps the input image to the outputs of those 8 layers
activation_model = Model(inputs = [model.input], outputs = conv_output)

activations = activation_model.predict(img_tensor)

len(activations)

# Output

8
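The eight shapes listed above follow from two rules: a 3×3 `Conv2D` with the default "valid" padding shrinks each side by 2, and a 2×2 `MaxPool2D` halves it with floor division (17 becomes 8 only after the next convolution, 15 pools to 7). A small sketch with a hypothetical helper reproduces the list for the 150×150 input:

```python
def feature_map_shapes(input_size, filters, kernel=3, pool=2):
    """(height, width, channels) after each Conv2D ('valid') and MaxPool2D pair."""
    shapes = []
    size = input_size
    for n in filters:
        size = size - kernel + 1      # 3x3 'valid' convolution
        shapes.append((size, size, n))
        size = size // pool           # 2x2 max pooling, floor division
        shapes.append((size, size, n))
    return shapes

for shape in feature_map_shapes(150, [32, 64, 128, 128]):
    print(shape)
```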

- Visualization

print(activations[0].shape)

plt.matshow(activations[0][0, :, :, 7], cmap = 'viridis')

plt.show()

# Output

(1, 148, 148, 32)

print(activations[0].shape)

plt.matshow(activations[0][0, :, :, 10], cmap = 'viridis')

plt.show()

# Output

(1, 148, 148, 32)

- Visualize all intermediate activations

# Visualize how the image is transformed at each layer
layer_names = []
for layer in model.layers[:8]:
    layer_names.append(layer.name)

images_per_row = 16

for layer_name, layer_activation in zip(layer_names, activations):
    num_features = layer_activation.shape[-1]    # number of channels
    size = layer_activation.shape[1]             # each feature map is size x size
    num_cols = num_features // images_per_row    # rows of 16 channels in the grid
    display_grid = np.zeros((size * num_cols, size * images_per_row))

    for col in range(num_cols):
        for row in range(images_per_row):
            channel_image = layer_activation[0, :, :, col * images_per_row + row]
            # Standardize the channel, then map it to a displayable 0-255 range
            channel_image -= channel_image.mean()
            channel_image /= (channel_image.std() + 1e-5)  # epsilon avoids division by zero
            channel_image *= 64
            channel_image += 128
            channel_image = np.clip(channel_image, 0, 255).astype('uint8')
            display_grid[col * size : (col + 1) * size,
                         row * size : (row + 1) * size] = channel_image

    scale = 1. / size
    plt.figure(figsize = (scale * display_grid.shape[1],
                          scale * display_grid.shape[0]))
    plt.title(layer_name)
    plt.grid(False)
    plt.imshow(display_grid, aspect = 'auto', cmap = 'viridis')

plt.show()
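The per-channel processing and the grid size can be tested without a trained model. The NumPy sketch below (hypothetical helper names) isolates the two pieces of the loop above: standardizing a feature map and mapping it to `uint8` around a mid-gray of 128, and computing the mosaic size when channels are tiled 16 per row. The small `eps` term is an assumption added to guard against constant channels, such as a ReLU output that is zero everywhere.

```python
import numpy as np

def channel_to_uint8(channel, eps=1e-5):
    """Standardize one feature map and map it to displayable 0-255 values."""
    channel = channel.astype("float32")
    channel = (channel - channel.mean()) / (channel.std() + eps)  # eps: assumed guard
    channel = channel * 64 + 128        # about +/-2 std maps into 0..255
    return np.clip(channel, 0, 255).astype("uint8")

def grid_shape(num_features, size, images_per_row=16):
    """Height and width of the mosaic that tiles channels in rows of 16."""
    num_rows = num_features // images_per_row
    return size * num_rows, size * images_per_row

rng = np.random.default_rng(0)
img = channel_to_uint8(rng.normal(size=(148, 148)))
print(img.dtype, img.min() >= 0, img.max() <= 255)
print(channel_to_uint8(np.zeros((4, 4)))[0, 0])  # constant map -> 128
print(grid_shape(32, 148))  # first conv layer: (296, 2368)
```

Without the epsilon, a channel with zero standard deviation would produce NaN values, which is why the loop above divides by `std() + 1e-5`.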
